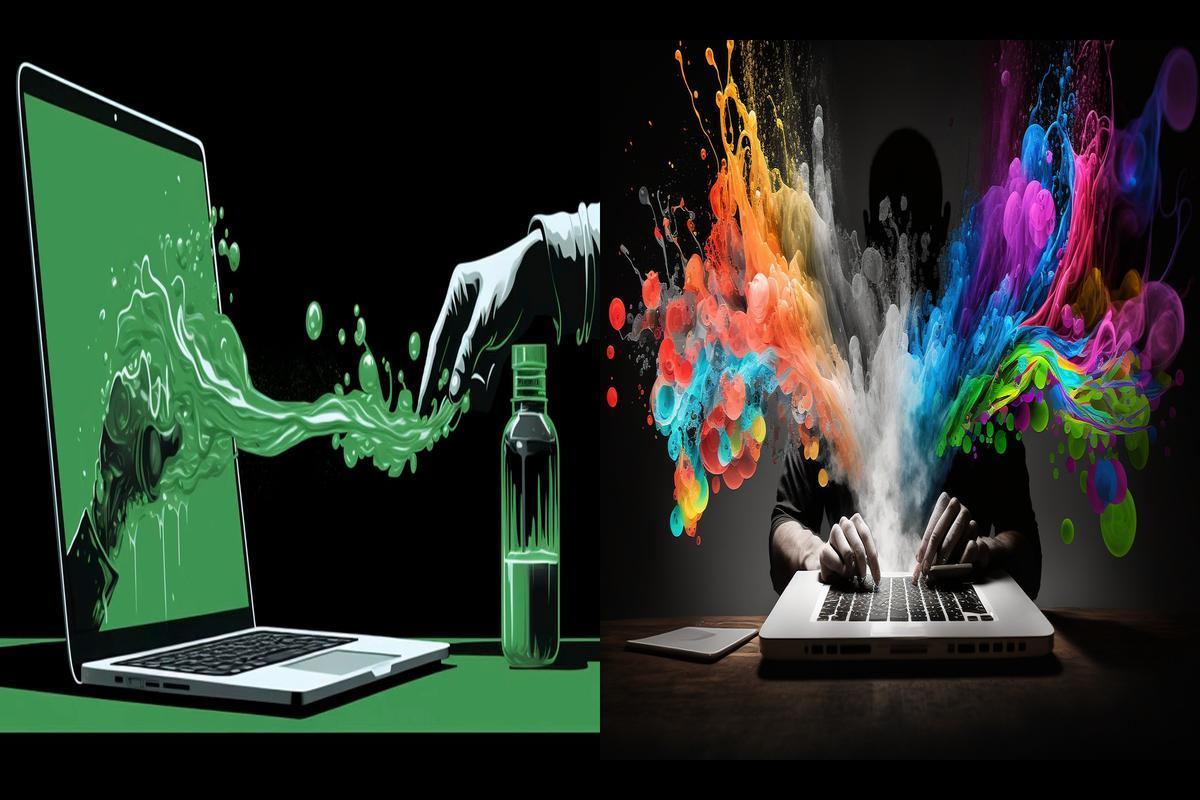

Artificial Intelligence (AI) has advanced significantly. Advanced AI models such as DALL-E 2 and Midjourney have revolutionized the creation of unique, lifelike images from text prompts. However, a critical issue has arisen: artists’ creations are being used to train these AI models without authorization. This has led to frustration and anger among artists, whose works are used without permission or acknowledgment. Artists now have an antidote called Nightshade, a tool that allows them to “poison” AI models as a countermeasure.

Empowering artists with Nightshade

Generative AI models like Midjourney and DALL-E 2 use neural networks trained on existing collections of art to generate new images. Unfortunately, their training datasets are often gathered from publicly available online platforms without proper authorization, so artists’ works are used without consent, causing understandable anxiety among them.

Developed by researchers at the University of Chicago in response to this challenge, Nightshade gives artists a proactive way to protect their work. Nightshade’s web application allows artists to upload their artwork, which is then analyzed in depth. Pixel-by-pixel changes are introduced to distort what AI models learn from the original artwork. The result is a modified image that closely resembles the original but deliberately carries misleading information. When AI models are trained on these “poisoned” works of art, they produce meaningless results when generating new images.
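To make the idea of small pixel-by-pixel changes concrete, here is a minimal sketch in Python. It is not Nightshade’s algorithm: the real tool chooses its changes deliberately to mislead a model, while this sketch uses bounded random noise as a stand-in. The function names, the `epsilon` limit, and the tiny grayscale image are all assumptions made for illustration. The sketch shows only one property described above: every pixel moves by a small, capped amount, so the modified image stays close to the original.

```python
import random


def perturb_image(pixels, epsilon=4, seed=0):
    """Return a copy of a grayscale image with each pixel shifted by at most epsilon.

    Illustrative only: random noise stands in for the purposeful changes
    a real poisoning tool would compute.
    """
    rng = random.Random(seed)
    perturbed = []
    for row in pixels:
        new_row = []
        for value in row:
            delta = rng.randint(-epsilon, epsilon)  # placeholder for a chosen change
            new_row.append(min(255, max(0, value + delta)))  # stay in the 8-bit range
        perturbed.append(new_row)
    return perturbed


def max_pixel_difference(a, b):
    """Largest absolute per-pixel difference between two same-sized images."""
    return max(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


# Hypothetical 2x3 grayscale image with values from 0 to 255.
original = [[0, 64, 128], [192, 255, 32]]
poisoned = perturb_image(original, epsilon=4)

# The difference never exceeds epsilon, so the two images look nearly identical.
print(max_pixel_difference(original, poisoned))
```

A smaller `epsilon` keeps the modified image closer to the original. In this simplified picture, flat areas of color offer little detail to hide a change in, which is one way to think about why such artwork may show visible distortion.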

Nightshade empowers artists, giving them a degree of control in the age of AI by reducing the value of their artwork for unauthorized model training. However, it is important to acknowledge certain limitations. Artwork with little texture and flat colors may be visibly distorted during the modification process. In addition, the effectiveness of Nightshade depends on widespread adoption among artists, and the platform currently offers no way to pay artists directly, which is a significant concern in the creative community.

Exploring the ethical implications

The rise of AI art has sparked complex discussions about licensing, intellectual property rights, and the legal status of AI-generated artworks. Tools like Nightshade actively contribute to these ongoing policy discussions, shedding light on the ethical issues in AI art. In a changing AI art landscape, it is important for artists to re-establish control over their creations. Looking ahead, licensing frameworks and fair compensation mechanisms may be explored for artists whose work is used in the AI domain.

Nightshade serves as a powerful tool for artists who want to prevent unauthorized use of their work in AI model training. Artists deliberately distort aspects of their artwork, reducing the effectiveness of AI models in generating new images. However, it’s important to acknowledge Nightshade’s limitations, including possible distortions in artwork with little texture and flat colors, as well as the need for extensive coordination between artists. Ethical issues surrounding AI art are gaining attention, and ongoing discussions on licensing and artist compensation are set to shape the direction of this growing field.

Frequently Asked Questions:

1. How does Nightshade work?

A. Nightshade works by analyzing an artist’s artwork and making pixel-by-pixel changes that distort the features AI models learn from it. Models trained on these modified images produce meaningless results.

2. Are there any restrictions to using Nightshade?

A. Yes. Nightshade can cause distortion in artwork with little texture and flat colors. In addition, Nightshade requires coordination between artists to maximize its effectiveness.

3. Does Nightshade pay artists directly?

A. Nightshade does not pay artists directly. However, it allows artists to protect their creations from unauthorized use in AI model training.